Climate models are the main tool scientists use to assess how much the Earth’s temperature will change given an increase in fossil fuel pollutants in the atmosphere. As a climate scientist, I’ve used them in all my research projects, including one predicting a change in Southwestern US precipitation patterns. But how exactly did climate models come to be?

Behind today’s climate models lie decades of advances in both science and computing technology. These models weren’t created overnight: they are the cumulative work of the world’s brightest climate scientists, mathematicians, computer scientists, chemists, and physicists since the 1940s.

Believe it or not, climate models are actually part of the driving force behind the advancement of computers! Did you know that the second use of the world’s first general-purpose electronic computer was to make a weather forecast? Predicting the weather and climate is a complex problem that combines computer science and physics. It is a form of applied mathematics that unites many different fields.

This is the first blog in a three-part series. This first one focuses on the history of climate models; the second will discuss what a climate model is and what it is used for; and in the third blog I will explore how climate scientists integrate the rapidly changing field of machine learning into climate science.

Predicting the weather and climate is a physics problem

Scientists hypothesized early on that we could predict the weather and longer-term climate using a set of equations that describe the motion and energy transfer of the atmosphere. Because there is often confusion about the difference between weather and climate, keep in mind that the climate is just the weather averaged over a long period of time (typically 30 years). The atmosphere around us is a fluid, invisible to the human eye, and we can apply the mathematics of fluid motion to predict how it will look in the future. Check out this website for a representation of that fluid in motion.

In 1904, Vilhelm Bjerknes, a founder of modern meteorological forecasting, wrote:

“If it is true, as every scientist believes, that subsequent atmospheric states develop from the preceding ones according to physical law, then it is apparent that the necessary and sufficient conditions for the rational solution of forecasting problems are: 1) A sufficiently accurate knowledge of the state of the atmosphere at the initial time, 2) A sufficiently accurate knowledge of the laws according to which one state of the atmosphere develops from another.”

In other words, if we know the right mathematical equations that predict how atmospheric motion and energy transfer work, and we also know how the atmosphere currently looks, then we should be able to predict what the atmosphere will do in the future!
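To make Bjerknes’ two conditions concrete, here is a toy sketch in Python. It assumes a made-up one-dimensional “atmosphere”: a ring of grid points whose temperatures are carried along by a steady wind. The starting temperatures play the role of condition 1, and a single simplified physical law (the wind moves warm and cold air downstream) plays the role of condition 2. The function names and numbers are hypothetical and purely illustrative; real weather models solve many more equations in three dimensions.

```python
def step(temps, wind, dx, dt):
    """Advance the toy atmosphere by one time step (condition 2: the law).

    Uses a first-order upwind scheme for linear advection,
    dT/dt = -wind * dT/dx, on a periodic ring of grid points.
    Assumes wind > 0, so air arrives from the left-hand neighbor.
    """
    courant = wind * dt / dx  # must be <= 1 for this scheme to stay stable
    # temps[i - 1] wraps around to the last point when i == 0 (periodic ring)
    return [t - courant * (t - temps[i - 1]) for i, t in enumerate(temps)]


def forecast(initial, wind, dx, dt, n_steps):
    """Apply the law repeatedly, starting from the known state (condition 1)."""
    state = list(initial)
    for _ in range(n_steps):
        state = step(state, wind, dx, dt)
    return state


# A 10-point ring at 10 degC with one warm spot (20 degC) at position 2.
initial = [10.0, 10.0, 20.0] + [10.0] * 7
# 10 m/s wind, 100 km grid spacing, 10,000 s (about 2.8 hour) time step.
result = forecast(initial, wind=10.0, dx=100_000.0, dt=10_000.0, n_steps=3)
print(result.index(20.0))  # prints 5: the warm spot moved 3 points downstream
```

With the wind speed, grid spacing, and time step chosen here, the air moves exactly one grid point per step, so after three steps the warm spot that began at position 2 sits at position 5. Change the initial state or the law, and the forecast changes with it, which is exactly Bjerknes’ point.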

So why didn’t Vilhelm try to predict the weather in 1904 if he knew it could be done? Well, at the time, observations of the atmosphere were sparse and unreliable. Vilhelm also knew that calculating the future state of the atmosphere would require an immense number of calculations, too many to do by hand.

It wasn’t until the early 1920s that Lewis Fry Richardson, an English mathematician and physicist, carried out the first attempt at what had, until then, been thought of as impossible. Using a set of equations that describe atmospheric movement, he calculated by hand how the weather would change six hours into the future. How long did it take him to carry out those calculations? Six weeks. So you can see it wasn’t possible to make any reliable weather prediction with only calculations done by hand.

Computers changed the forecasting game

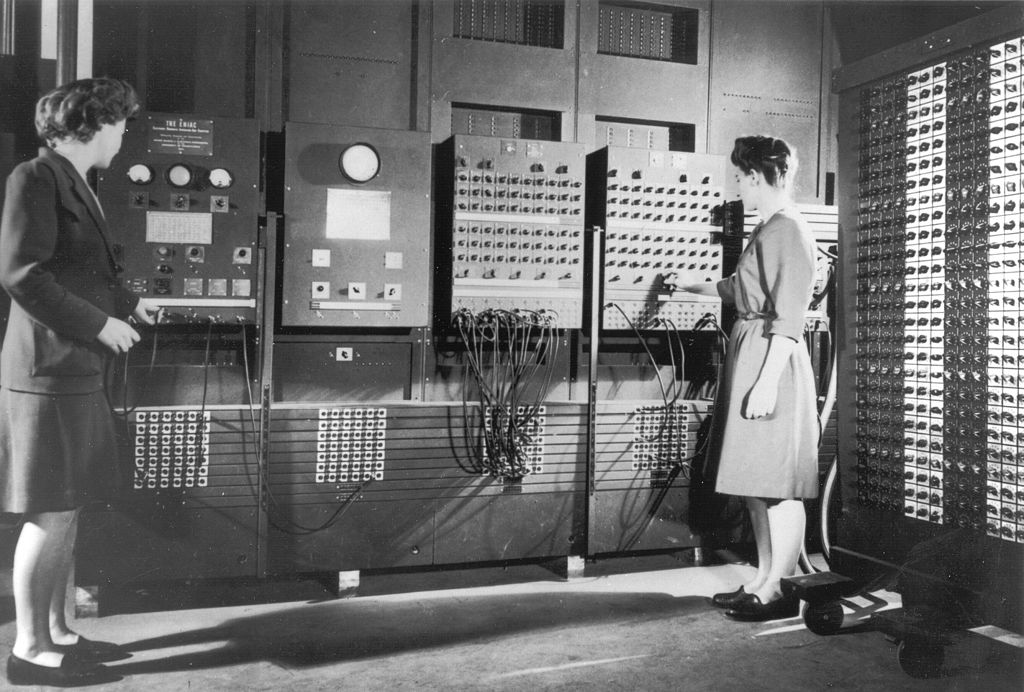

So how can we predict the weather and climate so well today? Computers! In 1945, the Electronic Numerical Integrator and Computer (ENIAC) was completed in the United States. ENIAC was the first general-purpose electronic computer, and it could perform an impressive number of calculations in seconds (here, “impressive” is relative to the era: ENIAC could make about 5,000 calculations per second, while today’s iPhone can make billions of calculations per second). Note that there were computing machines before ENIAC, but they were mechanical, electromechanical, or built for a single narrow task, and none combined ENIAC’s speed and flexibility.

Originally, this electronic computer was used for nuclear weapons calculations, but believe it or not, the second use for ENIAC was to perform the calculations necessary to predict the weather. Why? Because weather prediction sat at the intersection of applied mathematics and physics and demanded far more calculations than people could do quickly by hand.

In 1946, one year after ENIAC was finished, John von Neumann, a Princeton mathematician and a pioneer of early computers, organized a conference of meteorologists. According to Jule Charney, a leading 20th-century meteorologist, “[to] von Neumann, meteorology was par excellence the applied branch of mathematics and physics that stood the most to gain from high-speed computation.”

Von Neumann knew that with ENIAC we could start predicting both the short-term weather and the longer-term climate. In 1950, a successful weather prediction for North America was run on ENIAC, setting the stage for the future of weather and climate prediction.

Who programmed ENIAC? Its first six programmers were Jean Bartik, Kathleen Antonelli, Marlyn Meltzer, Betty Holberton, Frances Spence, and Ruth Teitelbaum, all women mathematicians recruited by the military during World War II as so-called “human computers”. I mention this briefly here because women’s contributions to the advancement of computer technology and weather forecasting are often overlooked. During Women’s History Month, it is even more important to elevate their critical contributions and remind folks of them.

The first climate model

The weather forecast run on ENIAC in 1950 covered only a short period (24 hours) and only North America. To model the climate successfully, scientists would need a model that could simulate decades of Earth time over at least one hemisphere.

The first general circulation model (GCM), the term climate scientists use for a climate model, was developed by Norman Phillips in 1956 on a more powerful computer than ENIAC that could handle more calculations. However, this GCM was primitive by today’s standards.

After Phillips demonstrated that the climate system could be modeled, four institutions in the United States independently developed the first atmosphere-only GCMs in the 1960s: UCLA, the Geophysical Fluid Dynamics Laboratory (GFDL) in Princeton, the National Center for Atmospheric Research in Boulder, and Lawrence Livermore National Laboratory. Having four models developed in slightly different ways helped make the young discipline more robust.

And thus, the age of climate modeling was born. These GCMs could predict the future state of the atmosphere and its circulation given any change to atmospheric composition (such as heat-trapping pollutants), which is the main application of GCMs to this day.

Today’s climate models

GCMs are much more sophisticated today than they were in the 1960s. They have higher resolution (meaning they divide the globe into smaller grid cells and so perform calculations at more points per area), they are informed by better physical approximations, and they replicate the observed climate much better. With each decade since their conception, components that simulate other parts of the Earth system, such as the carbon cycle, vegetation, and aerosols, have been added, increasing GCM complexity and accuracy. Present-day GCMs simulate not just the atmosphere, but also the ocean, the land, and sea ice (see figure below).
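The link between resolution and the number of calculations is simple arithmetic. The sketch below assumes the simplest possible grid, one with equal spacing in latitude and longitude (many real models use other grid layouts and also stack dozens of vertical levels), and counts how many horizontal grid columns cover the globe. The helper name is hypothetical.

```python
def grid_columns(spacing_deg):
    """Count horizontal cells on a hypothetical regular latitude-longitude grid.

    spacing_deg is the grid spacing in degrees, in both latitude and longitude.
    """
    n_lon = round(360 / spacing_deg)  # cells around a circle of latitude
    n_lat = round(180 / spacing_deg)  # cells from pole to pole
    return n_lon * n_lat


for spacing in (2.0, 1.0, 0.25):
    print(f"{spacing} degree grid: {grid_columns(spacing):,} columns")
```

Each halving of the grid spacing quadruples the number of columns, so going from a 1-degree grid (64,800 columns) to a quarter-degree grid (1,036,800 columns) multiplies the work per time step by 16. In practice the cost grows even faster, because finer grids generally also need shorter time steps to stay numerically stable.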

When scientists run a climate model, they are actually running four different models simultaneously: one for the atmosphere, one for the ocean, one for the land, and one for sea ice. These four models exchange information with each other through a component called a “coupler” while the simulation runs.

So, for example, if the ocean model says the temperature of the ocean surface changes by 3°C given a doubling of CO2 in the atmosphere, this information is then relayed to the atmospheric model, which can then respond and change the atmospheric circulation based on that temperature change.
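Here is a toy Python sketch of that exchange, assuming just two of the four components (ocean and atmosphere) and a single grid point. The class names and the simple rules inside them are hypothetical, chosen only to show a coupler passing each model’s output to the other every time step; they are not how any real climate model computes heat exchange.

```python
from dataclasses import dataclass


@dataclass
class Ocean:
    sst: float  # sea surface temperature, degC

    def step(self, heat_flux):
        # Toy rule: surface temperature changes in proportion to the net heat received.
        self.sst += 0.01 * heat_flux


@dataclass
class Atmosphere:
    air_temp: float  # near-surface air temperature, degC

    def heat_flux_to_ocean(self, sst):
        # Toy rule: heat flows from warmer air into cooler water (negative = ocean to air).
        return self.air_temp - sst

    def step(self, sst):
        # Toy rule: the air relaxes halfway toward the sea surface temperature each step.
        self.air_temp += 0.5 * (sst - self.air_temp)


class Coupler:
    """Passes each component's output to the other once per time step."""

    def __init__(self, ocean, atmosphere):
        self.ocean = ocean
        self.atmosphere = atmosphere

    def step(self):
        flux = self.atmosphere.heat_flux_to_ocean(self.ocean.sst)  # atmosphere -> ocean
        self.ocean.step(flux)
        self.atmosphere.step(self.ocean.sst)  # ocean -> atmosphere


# Start in balance at 15 degC, then impose a 3 degC ocean surface warming
# like the one described in the text.
ocean, atmosphere = Ocean(sst=15.0), Atmosphere(air_temp=15.0)
ocean.sst += 3.0
coupler = Coupler(ocean, atmosphere)
for _ in range(10):
    coupler.step()
print(f"ocean {ocean.sst:.2f} degC, air {atmosphere.air_temp:.2f} degC")
```

The air warms toward the new ocean temperature while the ocean gives up a little heat, so the two converge. Real couplers do much more, for example interpolating fields between the different grids each component uses and reconciling their different time steps.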

For more information on what a climate model is, how it works, and how climate scientists use them, check out my climate model explainer blog coming soon.

Climate models improve incrementally through decades of scientific work

Climate models are some of the most reliable models in existence because they have been built upon, tested, and corrected for decades. And while there are some problems we’re still working to correct, they can replicate the climate system overall with incredible skill and accuracy.

Climate models are at the foundation of the scientific consensus around climate change. And at UCS, we use climate models to advance our understanding of the climate system in order to predict how communities are affected by a changing climate, and, importantly, to know who to hold accountable for the climate crisis.